Midterm project

We tend to think of energy and waste as two separate streams. But producing (useful) energy also produces waste. A few months ago I read this article on solar waste and began wondering about the waste from burning coal.

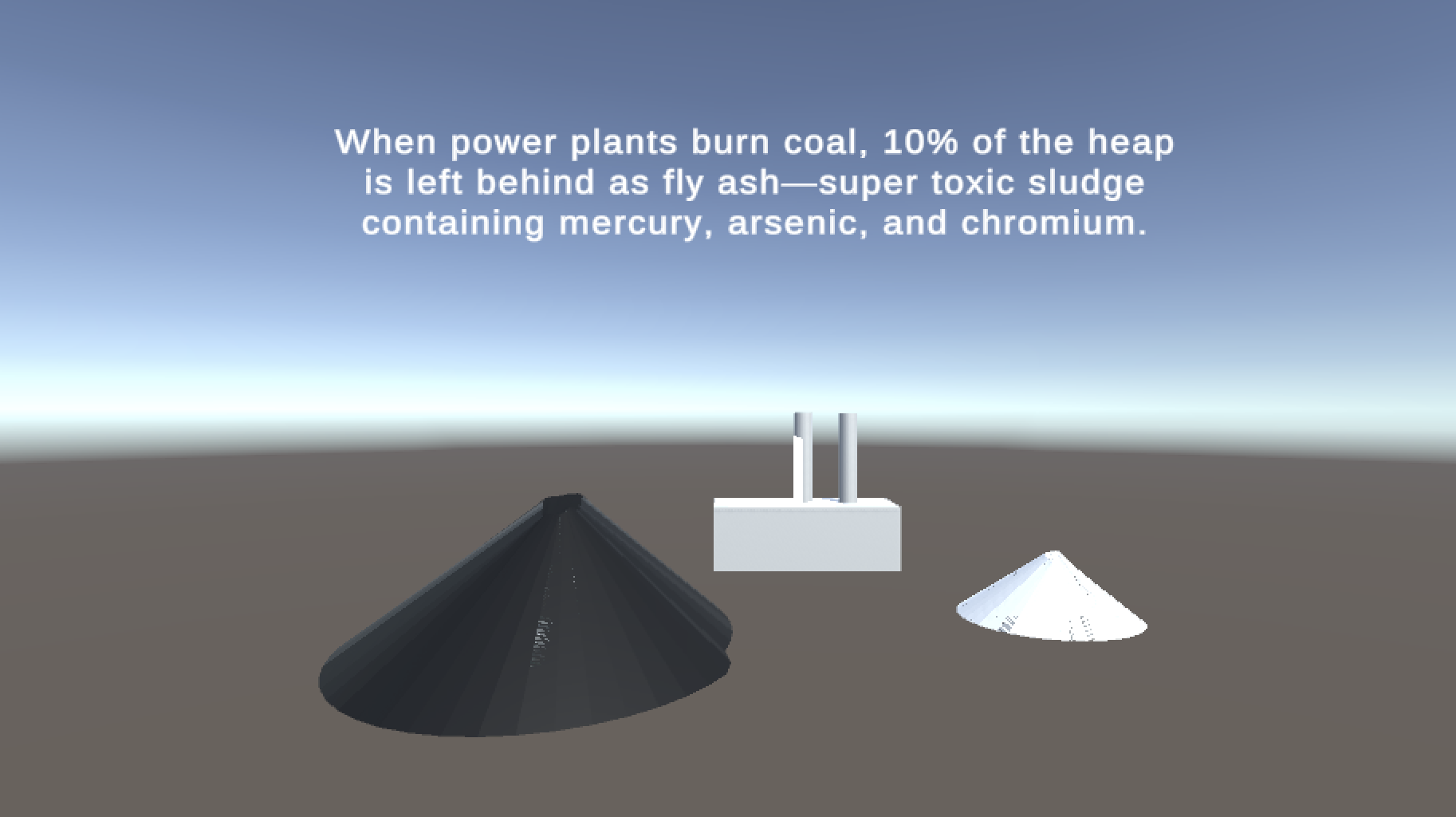

Coal, I learned, leaves 10-15% of its volume behind after burning, in the form of highly toxic coal ash. This ash leaks into waterways, blows into the air, and gets stored in landfills. In the U.S., a chunk of the unimaginably vast supply of this "resource" is reused in road building, as an ingredient in concrete. I found this article enlightening. It included this tidbit:

From 2000 to 2017, 118.4 million tons of coal ash were beneficially used, according to Earthjustice: Enough to cover a six-lane highway from Washington, D.C. to Sydney, Australia.

Virtual reality may not be an empathy machine, but it's great at making scale feel real.

3D modeling

I'd used Blender a tiny bit in high school, but this week I had to dust those few skills off and learn a redesigned UI. I modeled a basic coal power plant, a pile of coal, and a pile of coal ash with precisely 10% of the coal pile's volume.
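Getting the ash pile to exactly 10% of the volume is not the same as making it 10% as tall. Volume grows with the cube of a uniform scale, so each axis has to shrink by the cube root of 0.1, roughly 0.464. The short Python check below illustrates this under stated assumptions: the heaps are treated as simple cones, and the radius and height are made-up placeholder values. The real meshes may be shaped differently, but the per-axis factor holds for any shape scaled uniformly.

```python
import math

def cone_volume(radius: float, height: float) -> float:
    """Volume of a right circular cone, used here as a rough stand-in for a heap."""
    return math.pi * radius ** 2 * height / 3.0

def linear_scale_for_volume(volume_ratio: float) -> float:
    """Uniform per-axis scale factor that multiplies a shape's volume by volume_ratio."""
    # Volume scales with the cube of a uniform linear scale, so invert with a cube root.
    return volume_ratio ** (1.0 / 3.0)

# Hypothetical coal-pile dimensions in scene units; only the ratio matters.
coal_radius, coal_height = 4.0, 3.0

s = linear_scale_for_volume(0.10)  # ash pile at 10% of the coal pile's volume
ash_radius, ash_height = coal_radius * s, coal_height * s

ratio = cone_volume(ash_radius, ash_height) / cone_volume(coal_radius, coal_height)
print(f"scale factor: {s:.3f}, volume ratio: {ratio:.3f}")
```

In a 3D tool, that factor would go into the object's scale on all three axes at once. Scaling only the height by 0.1 would leave a flat pancake with a tenth of the volume but none of the right proportions.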

I used Blender's built-in brick texture for the power plant building and a metallic shader for the smokestacks to set them apart. The coal and ash shaders were more complex, so I installed the BlenderKit plugin to download them.

Unity

This was my first time using Unity, and I struggled.

One of the first challenges was the scene layout. Whenever I entered Play Mode, the Game view was empty. I discovered that Blender adds a camera to its scenes by default, and Unity was rendering through that camera instead of the one I had configured. I deleted the Blender camera from the imported Blender model inside the Unity scene, and the scene worked again.

Adding typography was a challenge, but I'm fairly happy with the result. I imported a font (Geist), created a font asset, then set up a UI layer with text boxes. At first I struggled to get the text inside the camera frame, until I learned that the UI layer is tied to the camera rather than to the other objects in the scene.

The models lost their procedurally generated textures whether I exported them as .fbx or .usdz files or imported the Blender file directly. I never figured out how to embed or preserve them. At the last minute, I downloaded stock raster image textures to apply to the models in Unity, but they didn't look nearly as good as the procedural Blender ones.

Losing the textures hurt the globe most: without its surface, a sphere carries none of the meaning of a globe. I downloaded several projections of Earth's surface and tried re-applying them in Unity, but they looked terrible, as you can see.

Feedback & future

Though I had an extensive story in mind for this project, the resulting Unity scene doesn't hold a candle to what I was imagining. The modeling took more hours than I expected for such a simple scene. I had also assumed I could transfer the assets directly from Blender to Unity, but the textures, which carried most of the scene's meaning, didn't survive the move. I added the text elements because I couldn't make the point visually, but text doesn't take much advantage of the virtual environment.

Scale was the main reason this topic made sense to present in virtual reality. Both the feedback I got and my own impression were that the two mounds weren't at the right absolute scale to feel important: in tabletop AR, they're too small to be impactful. Making use of the infinite space around the viewer would be more effective.

I'd love to model further scenes to communicate the scale. A Walk Around the Block describes an average-size power plant in Minneapolis, MN, that consumes fifty rail cars of coal every day. Showing the rail cars arriving, and the coal ash left over, would communicate more powerfully how much is consumed.
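Combining the two figures already in this post gives a rough sense of what that scene would show. Applying the 10-15% ash-by-volume range to fifty rail cars of coal yields the ash left each day, measured in rail cars of the same size. This is a back-of-the-envelope sketch: the assumption that the plant burns coal every day of the year is mine, not the source's, and the helper names are hypothetical.

```python
RAIL_CARS_PER_DAY = 50               # coal deliveries per day, from A Walk Around the Block
ASH_FRACTION_RANGE = (0.10, 0.15)    # ash left behind, as a fraction of coal volume

def ash_rail_cars_per_day(cars: float, ash_fraction: float) -> float:
    """Daily coal ash, expressed in rail cars of the same volume as the coal cars."""
    return cars * ash_fraction

def ash_rail_cars_per_year(cars: float, ash_fraction: float, days: int = 365) -> float:
    """Yearly total, ASSUMING the plant runs every day (not stated in the source)."""
    return ash_rail_cars_per_day(cars, ash_fraction) * days

low, high = (ash_rail_cars_per_day(RAIL_CARS_PER_DAY, f) for f in ASH_FRACTION_RANGE)
low_year, high_year = (ash_rail_cars_per_year(RAIL_CARS_PER_DAY, f) for f in ASH_FRACTION_RANGE)

print(f"{low:.1f} to {high:.1f} rail cars of ash per day")
print(f"{low_year:,.0f} to {high_year:,.0f} rail cars of ash per year")
```

Counting in rail cars rather than tons keeps the comparison visual, which is what an animated train of cars would need.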

Animation would be the next step. The repetitive motion of the train cars would convey the pace of consumption, and being able to zoom into the highway model in the final scene would reveal just how far that Pacific-crossing distance is.